Integrating ELK with Spring Cloud for Log Collection

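What each ELK service does

- Elasticsearch: stores the collected logs.

- Logstash: collects the logs. Once a Spring Cloud application is integrated with Logstash, it sends its logs to Logstash, and Logstash forwards them to Elasticsearch.

- Kibana: a web UI for browsing the logs.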
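To make the application-to-Logstash hop concrete, here is a minimal sketch of what travels over the wire when the TCP input uses a line-delimited JSON codec: one JSON object per line, terminated by a newline. The class name `JsonLineSender` and the field names `level` and `message` are assumptions chosen for illustration. In the real integration a logging appender does this work, so the sketch only illustrates the format.

```java
import java.io.IOException;
import java.io.OutputStream;
import java.net.Socket;
import java.nio.charset.StandardCharsets;

public class JsonLineSender {

    /** Escapes the characters that would break a JSON string literal. */
    static String escape(String s) {
        StringBuilder sb = new StringBuilder();
        for (char c : s.toCharArray()) {
            switch (c) {
                case '"':  sb.append("\\\""); break;
                case '\\': sb.append("\\\\"); break;
                case '\n': sb.append("\\n"); break;
                case '\r': sb.append("\\r"); break;
                case '\t': sb.append("\\t"); break;
                default:
                    if (c < 0x20) {
                        sb.append(String.format("\\u%04x", (int) c));
                    } else {
                        sb.append(c);
                    }
            }
        }
        return sb.toString();
    }

    /** Builds one event: a single JSON object followed by a newline. */
    static String toJsonLine(String level, String message) {
        return "{\"level\":\"" + escape(level) + "\",\"message\":\"" + escape(message) + "\"}\n";
    }

    /** Writes one event to a Logstash TCP input (host and port are supplied by the caller). */
    static void send(String host, int port, String jsonLine) throws IOException {
        try (Socket socket = new Socket(host, port);
             OutputStream out = socket.getOutputStream()) {
            out.write(jsonLine.getBytes(StandardCharsets.UTF_8));
            out.flush();
        }
    }

    public static void main(String[] args) throws IOException {
        String line = toJsonLine("INFO", "order created");
        System.out.print(line);
        // Only touch the network when a host and port are given, e.g. "localhost 4560".
        if (args.length == 2) {
            send(args[0], Integer.parseInt(args[1]), line);
        }
    }
}
```

Run without arguments, it only prints the event. Given a host and port, it also sends the event to that TCP endpoint.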

Setting up ELK

Create the Logstash configuration file

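input {
  tcp {
    mode => "server"
    host => "0.0.0.0"
    # Port the Spring Cloud applications send their logs to
    port => 4560
    # One JSON document per line
    codec => json_lines
  }
}

output {
  elasticsearch {
    # Must be an Elasticsearch service name from the Compose file (es01 here)
    hosts => "es01:9200"
    # One index per day
    index => "springcloud-appname-%{+YYYY.MM.dd}"
  }
}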
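The `%{+YYYY.MM.dd}` suffix in the index setting is filled in from each event's timestamp, evaluated in UTC, so every day gets its own index. The sketch below computes the same name in Java, which can help when querying or cleaning up indices from code. The class and method names are hypothetical. Note that `java.time` needs lowercase `yyyy` for the calendar year, whereas the Logstash pattern uses the Joda-style `YYYY`.

```java
import java.time.Instant;
import java.time.ZoneOffset;
import java.time.format.DateTimeFormatter;

public class DailyIndexName {

    // Day formatter pinned to UTC, matching how the index date is derived from the event timestamp.
    private static final DateTimeFormatter DAY =
            DateTimeFormatter.ofPattern("yyyy.MM.dd").withZone(ZoneOffset.UTC);

    /** Returns the index an event with the given timestamp would be written to. */
    static String indexFor(Instant timestamp) {
        return "springcloud-appname-" + DAY.format(timestamp);
    }

    public static void main(String[] args) {
        // prints "springcloud-appname-2020.01.07"
        System.out.println(indexFor(Instant.parse("2020-01-07T10:08:00Z")));
    }
}
```

Because the date is taken in UTC, an event logged shortly after local midnight in a timezone ahead of UTC still lands in the previous day's index.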

Set up the ELK environment with Docker Compose

version: '2.2'
services:
  es01:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
    container_name: es01
    environment:
      - node.name=es01
      - cluster.name=elasticsearch
      - discovery.seed_hosts=es02,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data01:/usr/share/elasticsearch/data
    ports:
      - 9200:9200
    networks:
      - elastic
  es02:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
    container_name: es02
    environment:
      - node.name=es02
      - cluster.name=elasticsearch
      - discovery.seed_hosts=es01,es03
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data02:/usr/share/elasticsearch/data
    ports:
      # Elasticsearch listens on 9200 inside the container; expose it as 9201 on the host
      - 9201:9200
    networks:
      - elastic
  es03:
    image: docker.elastic.co/elasticsearch/elasticsearch:7.6.2
    container_name: es03
    environment:
      - node.name=es03
      - cluster.name=elasticsearch
      - discovery.seed_hosts=es01,es02
      - cluster.initial_master_nodes=es01,es02,es03
      - bootstrap.memory_lock=true
      - "ES_JAVA_OPTS=-Xms512m -Xmx512m"
    ulimits:
      memlock:
        soft: -1
        hard: -1
    volumes:
      - data03:/usr/share/elasticsearch/data
    ports:
      # Elasticsearch listens on 9200 inside the container; expose it as 9202 on the host
      - 9202:9200
    networks:
      - elastic
  kib01:
    image: docker.elastic.co/kibana/kibana:7.6.2
    container_name: kib01
    ports:
      - 5601:5601
    environment:
      ELASTICSEARCH_URL: http://es01:9200
      ELASTICSEARCH_HOSTS: http://es01:9200
    networks:
      - elastic
  logstash:
    image: docker.elastic.co/logstash/logstash:7.6.2
    container_name: logstash
    volumes:
      # Mount the Logstash configuration file (adjust the host path to your machine)
      - /Users/wutao/Documents/data/www/java/elk/logstash/logstash-springcloud.conf:/usr/share/logstash/pipeline/logstash.conf
    depends_on:
      # Start Logstash after Elasticsearch; the name must match a service defined above
      - es01
    ports:
      - 4560:4560
    networks:
      - elastic

volumes:
  data01:
    driver: local
  data02:
    driver: local
  data03:
    driver: local

networks:
  elastic:
    driver: bridge

Start ELK

docker-compose up -d

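Enter the Logstash container and install the json_lines plugin. The official Logstash image normally bundles this codec, so the install step is only needed if it is missing.

# Enter the Logstash container
docker exec -it logstash bash

# Install the plugin (Logstash lives under /usr/share/logstash in the image)
/usr/share/logstash/bin/logstash-plugin install logstash-codec-json_lines

# Leave the container
exit

# Restart the container
docker restart logstash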

Integrating Logstash into the Spring Cloud project

Add the logstash-logback-encoder dependency to pom.xml

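<!-- No version is given here, so it must come from a parent POM or a
     dependencyManagement section; otherwise add a version element. -->
<dependency>
    <groupId>net.logstash.logback</groupId>
    <artifactId>logstash-logback-encoder</artifactId>
</dependency>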

Write a logback XML configuration file so that logback sends its log output to Logstash

<?xml version="1.0" encoding="UTF-8"?>
<!DOCTYPE configuration>
<configuration>
    <include resource="org/springframework/boot/logging/logback/defaults.xml"/>
    <include resource="org/springframework/boot/logging/logback/console-appender.xml"/>

    <!-- Application name -->
    <property name="APP_NAME" value="your-app-name"/>
    <!-- Directory the log files are written to -->
    <property name="LOG_FILE_PATH" value="${LOG_FILE:-${LOG_PATH:-${LOG_TEMP:-${java.io.tmpdir:-/tmp}}}/logs}"/>
    <contextName>${APP_NAME}</contextName>

    <!-- Appender that writes one log file per day -->
    <appender name="FILE" class="ch.qos.logback.core.rolling.RollingFileAppender">
        <rollingPolicy class="ch.qos.logback.core.rolling.TimeBasedRollingPolicy">
            <fileNamePattern>${LOG_FILE_PATH}/${APP_NAME}-%d{yyyy-MM-dd}.log</fileNamePattern>
            <maxHistory>30</maxHistory>
        </rollingPolicy>
        <encoder>
            <pattern>${FILE_LOG_PATTERN}</pattern>
        </encoder>
    </appender>

    <!-- Appender that sends logs to Logstash -->
    <appender name="LOGSTASH" class="net.logstash.logback.appender.LogstashTcpSocketAppender">
        <!-- Reachable address and port of the Logstash TCP input -->
        <destination>your-ip:4560</destination>
        <encoder charset="UTF-8" class="net.logstash.logback.encoder.LogstashEncoder"/>
    </appender>

    <root level="INFO">
        <appender-ref ref="CONSOLE"/>
        <appender-ref ref="FILE"/>
        <appender-ref ref="LOGSTASH"/>
    </root>
</configuration>

Add a logging aspect to the shared (common) module

package ****.component;

import cn.hutool.core.util.StrUtil;
import cn.hutool.core.util.URLUtil;
import cn.hutool.crypto.digest.BCrypt;
import cn.hutool.json.JSONUtil;
import ****.WebLog;
import io.swagger.annotations.ApiOperation;
import org.aspectj.lang.JoinPoint;
import org.aspectj.lang.ProceedingJoinPoint;
import org.aspectj.lang.Signature;
import org.aspectj.lang.annotation.*;
import org.aspectj.lang.reflect.MethodSignature;
import org.slf4j.Logger;
import org.slf4j.LoggerFactory;
import org.springframework.core.annotation.Order;
import org.springframework.stereotype.Component;
import org.springframework.util.StringUtils;
import org.springframework.web.bind.annotation.RequestBody;
import org.springframework.web.bind.annotation.RequestParam;
import org.springframework.web.context.request.RequestContextHolder;
import org.springframework.web.context.request.ServletRequestAttributes;

import javax.servlet.http.HttpServletRequest;
import java.lang.reflect.Method;
import java.lang.reflect.Parameter;
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;

/**
 * @author 莫轩然
 * @date 2020-01-07 10:08
 */
@Aspect
@Component
@Order(1)
public class WebLogAspect {

    private static final Logger LOGGER = LoggerFactory.getLogger(WebLogAspect.class);

    // Replace "your-controller" with the package that contains your controllers
    @Pointcut("execution(public * your-controller..*.*(..))")
    public void webLog() {
    }

    @Before("webLog()")
    public void doBefore(JoinPoint joinPoint) throws Throwable {
    }

    @AfterReturning(value = "webLog()", returning = "ret")
    public void doAfterReturning(Object ret) throws Throwable {
    }

    @Around("webLog()")
    public Object doAround(ProceedingJoinPoint joinPoint) throws Throwable {
        long startTime = System.currentTimeMillis();
        // Get the current request object
        ServletRequestAttributes attributes = (ServletRequestAttributes) RequestContextHolder.getRequestAttributes();
        if (attributes == null) {
            // Not running inside an HTTP request, so there is nothing to record
            return joinPoint.proceed();
        }
        HttpServletRequest request = attributes.getRequest();
        // Record the request information
        WebLog webLog = new WebLog();
        Object result = joinPoint.proceed();
        Signature signature = joinPoint.getSignature();
        MethodSignature methodSignature = (MethodSignature) signature;
        Method method = methodSignature.getMethod();
        if (method.isAnnotationPresent(ApiOperation.class)) {
            ApiOperation apiOperation = method.getAnnotation(ApiOperation.class);
            webLog.setDescription(apiOperation.value());
        }
        long endTime = System.currentTimeMillis();
        String urlStr = request.getRequestURL().toString();
        webLog.setBasePath(StrUtil.removeSuffix(urlStr, URLUtil.url(urlStr).getPath()));
        // getRemoteAddr() returns the client IP; getRemoteUser() would return the login name
        webLog.setIp(request.getRemoteAddr());
        webLog.setMethod(request.getMethod());
        webLog.setParameter(getParameter(method, joinPoint.getArgs()));
        webLog.setResult(result);
        webLog.setSpendTime((int) (endTime - startTime));
        webLog.setStartTime(startTime);
        webLog.setUri(request.getRequestURI());
        webLog.setUrl(urlStr);
        LOGGER.info("{}", JSONUtil.parse(webLog));
        return result;
    }

    /**
     * Builds the request parameters from the method and the arguments passed to it.
     */
    private Object getParameter(Method method, Object[] args) {
        List<Object> argList = new ArrayList<>();
        Parameter[] parameters = method.getParameters();
        for (int i = 0; i < parameters.length; i++) {
            // Treat parameters annotated with @RequestBody as request parameters
            RequestBody requestBody = parameters[i].getAnnotation(RequestBody.class);
            if (requestBody != null) {
                argList.add(args[i]);
            }
            // Treat parameters annotated with @RequestParam as request parameters
            RequestParam requestParam = parameters[i].getAnnotation(RequestParam.class);
            if (requestParam != null) {
                Map<String, Object> map = new HashMap<>();
                String key = parameters[i].getName();
                if (!StringUtils.isEmpty(requestParam.value())) {
                    key = requestParam.value();
                }
                // Work on a local copy so the argument array itself is never modified
                Object value = args[i];
                if ("password".equals(key) && value != null) {
                    // Never log the raw password; log a BCrypt hash instead
                    value = BCrypt.hashpw(value.toString());
                }
                map.put(key, value);
                argList.add(map);
            }
        }
        if (argList.isEmpty()) {
            return null;
        } else if (argList.size() == 1) {
            return argList.get(0);
        } else {
            return argList;
        }
    }
}

import lombok.Data;

@Data
public class WebLog {

    /** Description of the operation */
    private String description;

    /** User who performed the operation */
    private String username;

    /** Time the operation started (epoch milliseconds) */
    private Long startTime;

    /** Time spent, in milliseconds */
    private Integer spendTime;

    /** Base path */
    private String basePath;

    /** URI */
    private String uri;

    /** URL */
    private String url;

    /** HTTP request method */
    private String method;

    /** IP address */
    private String ip;

    /** Request parameters */
    private Object parameter;

    /** Result returned by the request */
    private Object result;
}
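The aspect above avoids logging raw passwords by replacing the value before it reaches the log. A possible alternative, not used in the original code, is to mask sensitive values with a fixed placeholder, which is cheaper than hashing and leaves nothing derived from the secret in Elasticsearch. The following is a minimal sketch under that assumption. The class `ParamMasker`, the `token` key, and the `******` placeholder are all hypothetical.

```java
import java.util.Arrays;
import java.util.HashSet;
import java.util.LinkedHashMap;
import java.util.Locale;
import java.util.Map;
import java.util.Set;

public class ParamMasker {

    // Parameter names treated as sensitive (an assumption; extend as needed).
    private static final Set<String> SENSITIVE = new HashSet<>(Arrays.asList("password", "token"));

    static final String MASK = "******";

    /** Returns a copy of the parameters with sensitive values replaced by a placeholder. */
    static Map<String, Object> mask(Map<String, Object> params) {
        Map<String, Object> safe = new LinkedHashMap<>();
        for (Map.Entry<String, Object> entry : params.entrySet()) {
            boolean sensitive = SENSITIVE.contains(entry.getKey().toLowerCase(Locale.ROOT));
            safe.put(entry.getKey(), sensitive ? MASK : entry.getValue());
        }
        return safe;
    }

    public static void main(String[] args) {
        Map<String, Object> params = new LinkedHashMap<>();
        params.put("username", "tom");
        params.put("password", "s3cret");
        // prints "{username=tom, password=******}"
        System.out.println(mask(params));
    }
}
```

The input map is left untouched, so the controller still receives the original values and only the logged copy is masked.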

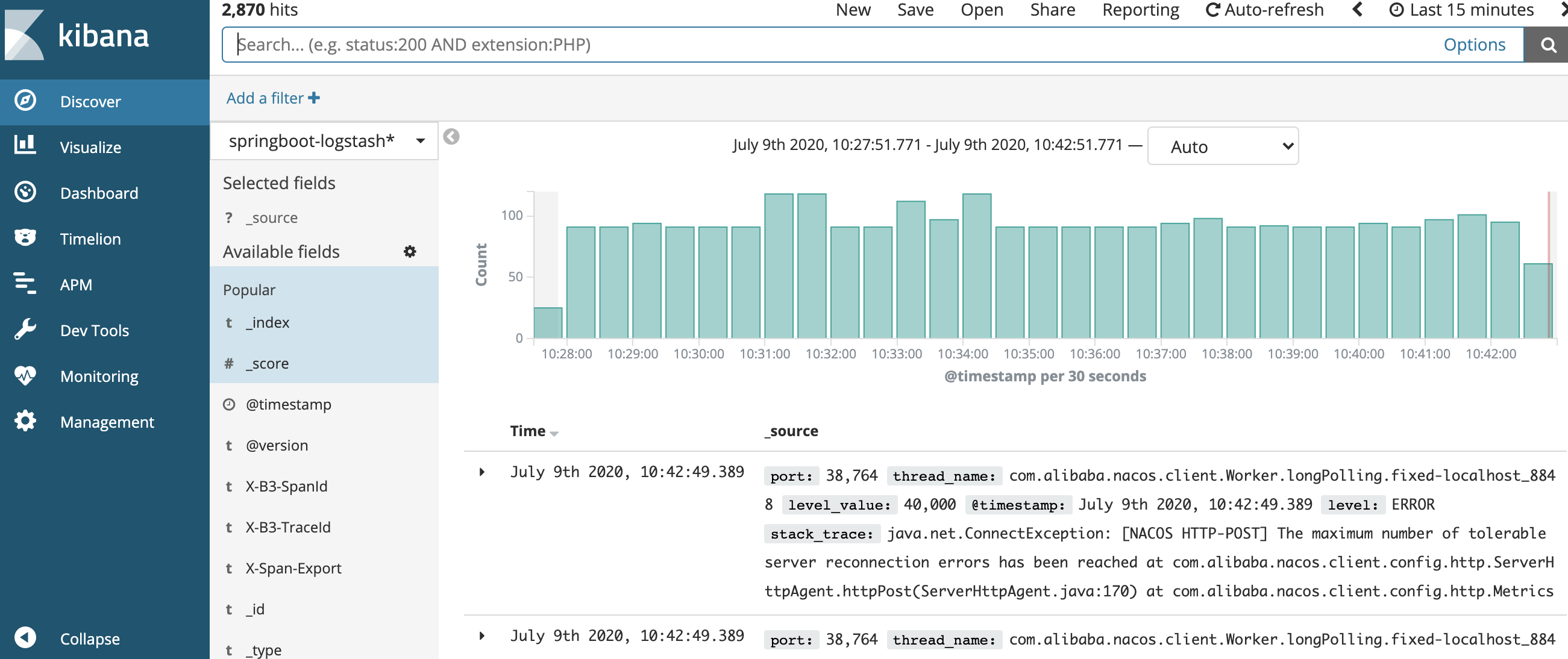

Screenshot of the result